Steadily growing volumes of data call for effective interaction strategies that keep complex information spaces usable. Using Google Earth as an example, this work presents a novel approach that explores map navigation through a combination of foot input and gaze control. The hands carry out the primary tasks of manipulating and selecting objects, while gaze-supported foot interaction allows map navigation to be performed independently, in parallel, and quickly.

Video

Concept
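
The idea of steering map navigation with the feet while the gaze supplies the target can be illustrated with a small sketch. It is not the mapping used in the prototypes, which this page does not specify. It assumes a pedal value in [-1, 1], a simple 2D viewport instead of Google Earth's camera, and an exponential zoom rate; `Viewport`, `screen_to_world`, `zoom_at_gaze`, and the `rate` parameter are hypothetical names chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Viewport:
    """Hypothetical 2D map view (a stand-in for a real map camera)."""
    cx: float           # world x-coordinate shown at the screen centre
    cy: float           # world y-coordinate shown at the screen centre
    scale: float        # world units per screen pixel (smaller = zoomed in)
    width: int = 1920   # assumed screen size in pixels
    height: int = 1080


def screen_to_world(view, sx, sy):
    """Convert a screen position (e.g. a gaze sample) to world coordinates."""
    return (view.cx + (sx - view.width / 2) * view.scale,
            view.cy + (sy - view.height / 2) * view.scale)


def zoom_at_gaze(view, gaze, pedal, dt, rate=1.5):
    """Zoom around the gaze point; the foot pedal sets direction and speed.

    pedal: assumed normalised pedal deflection in [-1, 1]
           (positive zooms in, negative zooms out, 0 leaves the view as is).
    dt:    time step in seconds.
    rate:  assumed zoom speed constant, not taken from the publications.
    """
    gx, gy = gaze
    wx, wy = screen_to_world(view, gx, gy)   # world point the user looks at
    new_scale = view.scale * math.exp(-rate * pedal * dt)
    # Re-centre the view so the looked-at world point stays under the gaze.
    new_cx = wx - (gx - view.width / 2) * new_scale
    new_cy = wy - (gy - view.height / 2) * new_scale
    return Viewport(new_cx, new_cy, new_scale, view.width, view.height)


if __name__ == "__main__":
    view = Viewport(cx=0.0, cy=0.0, scale=1.0)
    # Pedal fully pressed for half a second while looking at pixel (1200, 300).
    print(zoom_at_gaze(view, gaze=(1200, 300), pedal=1.0, dt=0.5))
```

Because the view is re-centred after every step, the location under the user's gaze stays fixed on screen while the pedal is pressed, so the hands never have to leave the primary task.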

Work in Progress

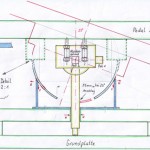

Prototypes

Setup

Preliminary Work

Before the custom-built foot input devices were developed, a Wii Balance Board was used as an input modality in preliminary work. Further details can be found in the later publication Gaze and Feet as Additional Input Modalities for Interacting with Spatial Data.

Links

- Gaze Interaction – Interactive gaze-supported applications in the Post-WIMP world

- ACM SIGCHI Conference on Human Factors in Computing Systems

Publications

@inproceedings{Coltekin2016,

author = {Arzu Coltekin and Julia Hempel and Alzbeta Brychtova and Ioannis Giannopoulos and Sophie Stellmach and Raimund Dachselt},

title = {Gaze and Feet as Additional Input Modalities for Interacting with Spatial Data},

booktitle = {Proceedings of ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences},

volume = {III-2},

year = {2016},

month = {7},

location = {Prague, Czech Republic},

pages = {113--120},

numpages = {8},

doi = {10.5194/isprs-annals-III-2-113-2016},

url = {http://dx.doi.org/10.5194/isprs-annals-III-2-113-2016},

keywords = {Interfaces, User Interfaces, Multimodal Input, Foot Interaction, Gaze Interaction, GIS, Usability}

}

@inproceedings{klamka2015c,

author = {Konstantin Klamka and Andreas Siegel and Stefan Vogt and Fabian G\"{o}bel and Sophie Stellmach and Raimund Dachselt},

title = {Look \& Pedal: Hands-free Navigation in Zoomable Information Spaces through Gaze-supported Foot Input},

booktitle = {Proceedings of the 17th International Conference on Multimodal Interaction},

year = {2015},

month = {11},

location = {Seattle, USA},

pages = {123--130},

numpages = {8},

doi = {10.1145/2818346.2820751},

url = {http://dx.doi.org/10.1145/2818346.2820751},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {multimodal interaction, foot input, gaze input, eye tracking, gaze-supported interaction, navigation, pan, zoom}

}

@inproceedings{fixme,

author = {Fabian G\"{o}bel and Konstantin Klamka and Andreas Siegel and Stefan Vogt and Sophie Stellmach and Raimund Dachselt},

title = {Gaze-supported Foot Interaction in Zoomable Information Spaces (Interactivity)},

booktitle = {Proceedings of the Conference on Human Factors in Computing Systems - Extended Abstracts},

year = {2013},

month = {4},

location = {Paris, France},

pages = {3059--3062},

numpages = {4},

doi = {10.1145/2468356.2479610},

url = {http://doi.acm.org/10.1145/2468356.2479610},

publisher = {ACM},

keywords = {eye tracking, foot, gaze, interaction, multimodal, navigation, pan, zoom}

}

@inproceedings{GKS-2013-CHI-workshop,

author = {Fabian G\"{o}bel and Konstantin Klamka and Andreas Siegel and Stefan Vogt and Sophie Stellmach and Raimund Dachselt},

title = {Gaze-supported Foot Interaction in Zoomable Information Spaces},

booktitle = {CHI 2013 Workshop on Gaze Interaction in the Post-WIMP World},

year = {2013},

month = {4},

location = {Paris, France},

numpages = {4}

}