When working with zoomable information spaces, complex tasks can be divided into primary and secondary tasks (e.g., pan and zoom). In this context, a multimodal combination of gaze and foot input is highly promising for supporting manual interaction, for example, with mouse and keyboard. Motivated by this, we present several alternatives for multimodal gaze-supported foot interaction for pan and zoom in a desktop computer setup. While a user's eye gaze is ideal for indicating their current point of interest and thus where to zoom in, foot interaction is well suited for parallel input controls, for example, to specify the zooming speed. Our investigation focuses on different foot input devices that vary in their degrees of freedom (e.g., one- and two-directional foot pedals) and can be seamlessly combined with gaze input.
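
The split described above, with gaze choosing where to zoom and the foot choosing how fast, can be illustrated with a short sketch. The following Python code is not the implementation used in our prototypes. The names `Viewport`, `pedal_to_zoom_rate` and `zoom_at_gaze`, the normalized pedal range of [-1, 1], the dead zone and the maximum zoom rate are all hypothetical assumptions. The sketch maps the deflection of a two-directional pedal to a zoom rate and zooms about the current gaze point, so that the content under the user's gaze stays in place on screen.

```python
import math
from dataclasses import dataclass


@dataclass
class Viewport:
    """Hypothetical 2D view transform: screen = (world - offset) * scale."""
    offset_x: float
    offset_y: float
    scale: float

    def to_world(self, sx: float, sy: float) -> tuple[float, float]:
        """Convert a screen position (e.g., the gaze point) to world coordinates."""
        return self.offset_x + sx / self.scale, self.offset_y + sy / self.scale


def pedal_to_zoom_rate(deflection: float, max_rate: float = 2.0,
                       dead_zone: float = 0.1) -> float:
    """Map an assumed two-directional pedal deflection in [-1, 1] to a zoom rate.

    Positive values zoom in, negative values zoom out. Deflections inside
    the dead zone are ignored so that a resting foot does not cause drift.
    The rate is expressed in natural-log units of scale per second (assumed).
    """
    d = max(-1.0, min(1.0, deflection))  # clamp out-of-range sensor values
    if abs(d) < dead_zone:
        return 0.0
    sign = 1.0 if d > 0 else -1.0
    # Rescale so that the rate starts at 0 at the edge of the dead zone.
    return sign * (abs(d) - dead_zone) / (1.0 - dead_zone) * max_rate


def zoom_at_gaze(view: Viewport, gaze_x: float, gaze_y: float,
                 rate: float, dt: float) -> Viewport:
    """Zoom by exp(rate * dt) while keeping the world point under the gaze fixed."""
    factor = math.exp(rate * dt)
    wx, wy = view.to_world(gaze_x, gaze_y)
    new_scale = view.scale * factor
    # Solve (wx - new_offset) * new_scale == gaze for the new offset.
    return Viewport(wx - gaze_x / new_scale, wy - gaze_y / new_scale, new_scale)


if __name__ == "__main__":
    view = Viewport(0.0, 0.0, 1.0)
    # Simulated frame: pedal fully pressed, gaze at screen position (400, 300).
    rate = pedal_to_zoom_rate(1.0)
    view = zoom_at_gaze(view, 400.0, 300.0, rate, dt=0.5)
    print(view.scale, view.to_world(400.0, 300.0))
```

Scaling exponentially over time means that holding the pedal at a constant deflection multiplies the magnification by the same factor per second, independent of the current zoom level. A one-directional pedal, as in some of the device variants, would additionally need another way to choose between zooming in and out, for example a second pedal.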

Video

Concept

Work in Progress

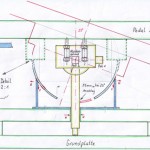

Prototypes

Setup

Preparatory Work

Before we built the custom-made foot pedals, we developed a prototype based on the Wii Balance Board. For further information, please refer to the publication "Gaze and Feet as Additional Input Modalities for Interacting with Spatial Data", which, however, was published later (in 2016) than the other work described here.

Related Links

- Gaze Interaction – Interactive gaze-supported applications in the Post-WIMP world

- ACM SIGCHI Conference on Human Factors in Computing Systems

Publications

@inproceedings{Coltekin2016,

author = {Arzu \c{C}\"{o}ltekin and Julia Hempel and Alzbeta Brychtova and Ioannis Giannopoulos and Sophie Stellmach and Raimund Dachselt},

title = {Gaze and Feet as Additional Input Modalities for Interacting with Spatial Data},

booktitle = {Proceedings of ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences},

volume = {III-2},

year = {2016},

month = {7},

location = {Prague, Czech Republic},

pages = {113--120},

numpages = {8},

doi = {10.5194/isprs-annals-III-2-113-2016},

url = {http://dx.doi.org/10.5194/isprs-annals-III-2-113-2016},

keywords = {Interfaces, User Interfaces, Multimodal Input, Foot Interaction, Gaze Interaction, GIS, Usability}

}

@inproceedings{klamka2015c,

author = {Konstantin Klamka and Andreas Siegel and Stefan Vogt and Fabian G\"{o}bel and Sophie Stellmach and Raimund Dachselt},

title = {Look \& Pedal: Hands-free Navigation in Zoomable Information Spaces through Gaze-supported Foot Input},

booktitle = {Proceedings of the 17th International Conference on Multimodal Interaction},

year = {2015},

month = {11},

location = {Seattle, USA},

pages = {123--130},

numpages = {8},

doi = {10.1145/2818346.2820751},

url = {http://dx.doi.org/10.1145/2818346.2820751},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {multimodal interaction, foot input, gaze input, eye tracking, gaze-supported interaction, navigation, pan, zoom}

}

@inproceedings{fixme,

author = {Fabian G\"{o}bel and Konstantin Klamka and Andreas Siegel and Stefan Vogt and Sophie Stellmach and Raimund Dachselt},

title = {Gaze-supported Foot Interaction in Zoomable Information Spaces (Interactivity)},

booktitle = {Proceedings of the Conference on Human Factors in Computing Systems - Extended Abstracts},

year = {2013},

month = {4},

location = {Paris, France},

pages = {3059--3062},

numpages = {4},

doi = {10.1145/2468356.2479610},

url = {http://doi.acm.org/10.1145/2468356.2479610},

publisher = {ACM},

keywords = {eye tracking, foot, gaze, interaction, multimodal, navigation, pan, zoom}

}

@inproceedings{GKS-2013-CHI-workshop,

author = {Fabian G\"{o}bel and Konstantin Klamka and Andreas Siegel and Stefan Vogt and Sophie Stellmach and Raimund Dachselt},

title = {Gaze-supported Foot Interaction in Zoomable Information Spaces},

booktitle = {CHI 2013 Workshop on Gaze Interaction in the Post-WIMP World},

year = {2013},

month = {4},

location = {Paris, France},

numpages = {4}

}