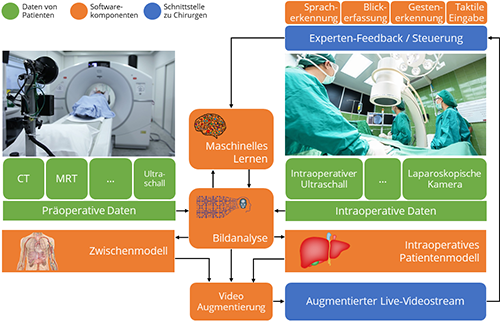

The ARAILIS project aims to make Augmented Reality (AR) and Artificial Intelligence (AI) usable for surgical interventions in order to improve minimally invasive surgery by combining pre- and intraoperative data.

Preoperative data from CT, MRI or ultrasound images are used to identify important structural information such as the location of tumors, blood vessels and resection lines. This information forms the basis for planning surgical interventions and supports orientation and decision-making during the operation. Surgeons currently rely mainly on their spatial imagination to transfer the preoperative data to the surgical situation at hand. This increases their cognitive workload, especially during laparoscopic (minimally invasive) procedures, where small camera views, limited depth perception, difficult hand-eye coordination and reduced tactile feedback present additional challenges.

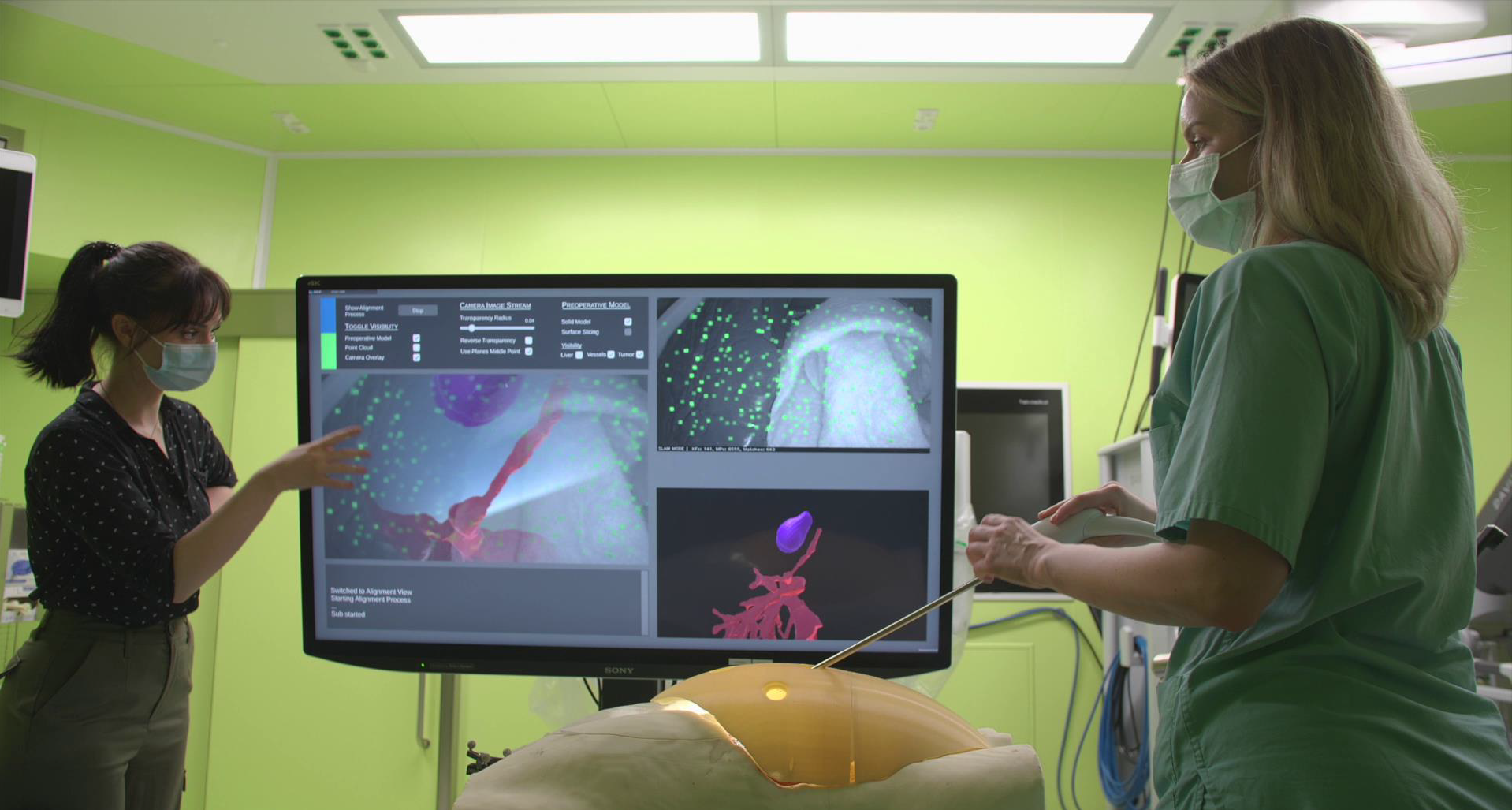

Since minimally invasive procedures offer advantages for the patient, such as a lower risk of infection, a shorter hospital stay and smaller scars, the ARAILIS project aims to develop a system to support laparoscopic interventions. This system will merge existing preoperative data sets with live intraoperative data during surgery. In this way, computer-assisted surgery using Augmented Reality (AR) and Artificial Intelligence (AI) will support intraoperative decision-making. The overall goal is to reduce the risk of complications and the need for follow-up care, and thus increase patient safety.

The ARAILIS research project brings together scientists from TU Dresden, the Carl Gustav Carus University Hospital, the Medical Faculty of TU Dresden, and the National Center for Tumor Diseases Dresden. Raimund Dachselt's working group is mainly responsible for the conception and evaluation of the human-machine interface (work package AP 7). This development builds on the Interactive Media Lab Dresden's extensive previous research on information visualization and human-computer interaction.

Further information can be found on the ARAILIS project page of the Chair of Software Technology.

Related Publications

@inproceedings{krug2023point,

author = {Katja Krug and Marc Satkowski and Reuben Docea and Tzu-Yu Ku and Raimund Dachselt},

title = {Point Cloud Alignment through Mid-Air Gestures on a Stereoscopic Display},

booktitle = {Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems},

series = {CHI EA'23},

year = {2023},

month = {04},

location = {Hamburg, Germany},

doi = {10.1145/3544549.3585862},

publisher = {ACM},

address = {New York, NY, USA}

}

@inproceedings{fixme,

author = {Wolfgang B\"{u}schel and Katja Krug and Konstantin Klamka and Raimund Dachselt},

title = {Demonstrating CleAR Sight: Transparent Interaction Panels for Augmented Reality},

booktitle = {Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems},

year = {2023},

month = {04},

isbn = {978-1-4503-9422-2/23/04},

location = {Hamburg, Germany},

pages = {1--5},

numpages = {5},

doi = {10.1145/3544549.3583891},

publisher = {ACM}

}

@inproceedings{docea22,

author = {Reuben Docea and Jan M\"{u}ller and Katja Krug and Matthias Hardner and Paul Riedel and Micha Pfeiffer and Martin Menzel and Fiona R. Kolbinger and Laura Frohneberg and J\"{u}rgen Weitz and Stefanie Speidel},

title = {ROS-based Image Guidance Navigation System for Minimally Invasive Liver Surgery},

booktitle = {RosCon Kyoto 2022},

year = {2022},

month = {10},

location = {Kyoto, Japan},

publisher = {Open Robotics}

}

@inproceedings{fixme,

author = {Katja Krug and Wolfgang B\"{u}schel and Konstantin Klamka and Raimund Dachselt},

title = {CleAR Sight: Exploring the Potential of Interacting with Transparent Tablets in Augmented Reality},

booktitle = {Proceedings of the 21st IEEE International Symposium on Mixed and Augmented Reality},

series = {ISMAR '22},

year = {2022},

month = {10},

isbn = {978-1-6654-5325-7/22},

location = {Singapore},

pages = {196--205},

doi = {10.1109/ISMAR55827.2022.00034},

publisher = {IEEE}

}

@inproceedings{mueller22,

author = {Jan M\"{u}ller and Reuben Docea and Matthias Hardner and Katja Krug and Paul Riedel and Ronald Tetzlaff},

title = {Fast High-Resolution Disparity Estimation for Laparoscopic Surgery},

booktitle = {Proceedings of IEEE Biomedical Circuits and Systems Conference 2022},

year = {2022},

month = {10},

location = {Taipei, Taiwan},

doi = {10.1109/BioCAS54905.2022.9948563},

publisher = {IEEE}

}

@inproceedings{docea22,

author = {Reuben Docea and Micha Pfeiffer and Jan M\"{u}ller and Katja Krug and Matthias Hardner and Paul Riedel and Martin Menzel and Fiona R. Kolbinger and Laura Frohneberg and J\"{u}rgen Weitz and Stefanie Speidel},

title = {A Laparoscopic Liver Navigation Pipeline with Minimal Setup Requirements},

booktitle = {Proceedings of IEEE Biomedical Circuits and Systems Conference 2022},

year = {2022},

month = {10},

location = {Taipei, Taiwan},

doi = {10.1109/BioCAS54905.2022.9948587},

publisher = {IEEE}

}

@inproceedings{fixme,

author = {Weizhou Luo and Eva Goebel and Patrick Reipschl\"{a}ger and Mats Ole Ellenberg and Raimund Dachselt},

title = {Demonstrating Spatial Exploration and Slicing of Volumetric Medical Data in Augmented Reality with Handheld Devices},

booktitle = {2021 IEEE International Symposium on Mixed and Augmented Reality},

year = {2021},

month = {10},

location = {Bari, Italy}

}

@inproceedings{luo2021exploring,

author = {Weizhou Luo and Eva Goebel and Patrick Reipschl\"{a}ger and Mats Ole Ellenberg and Raimund Dachselt},

title = {Exploring and Slicing Volumetric Medical Data in Augmented Reality Using a Spatially-Aware Mobile Device},

booktitle = {2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

year = {2021},

month = {10},

location = {Bari, Italy},

doi = {10.1109/ISMAR-Adjunct54149.2021.00076},

publisher = {IEEE}

}