We introduce a set of smartphone-based techniques for 3D panning and zooming in AR and present the results of a controlled lab experiment regarding efficiency and user preferences.


Paper @ MobileHCI ’19

Our paper describing the techniques, study setup, and results was presented at the 21st International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI 2019), which took place October 1–4, 2019 in Taipei, Taiwan.

Abstract

We investigated mobile devices as interactive controllers to support the exploration of 3D data spaces in head-mounted Augmented Reality (AR). In future mobile contexts, applications such as immersive analysis or ubiquitous information retrieval will involve large 3D data sets, which must be visualized in limited physical space. This necessitates efficient interaction techniques for 3D panning and zooming. Smartphones as additional input devices are promising because they are familiar and widely available in mobile usage contexts. They also allow more casual and discreet interaction compared to free-hand gestures or voice input.

We introduce smartphone-based pan & zoom techniques for 3D data spaces and present a user study comparing five techniques based on spatial interaction and/or touch input. Our results show that spatial device gestures can outperform both touch-based techniques and hand gestures in terms of task completion times and user preference.

Proposed Techniques

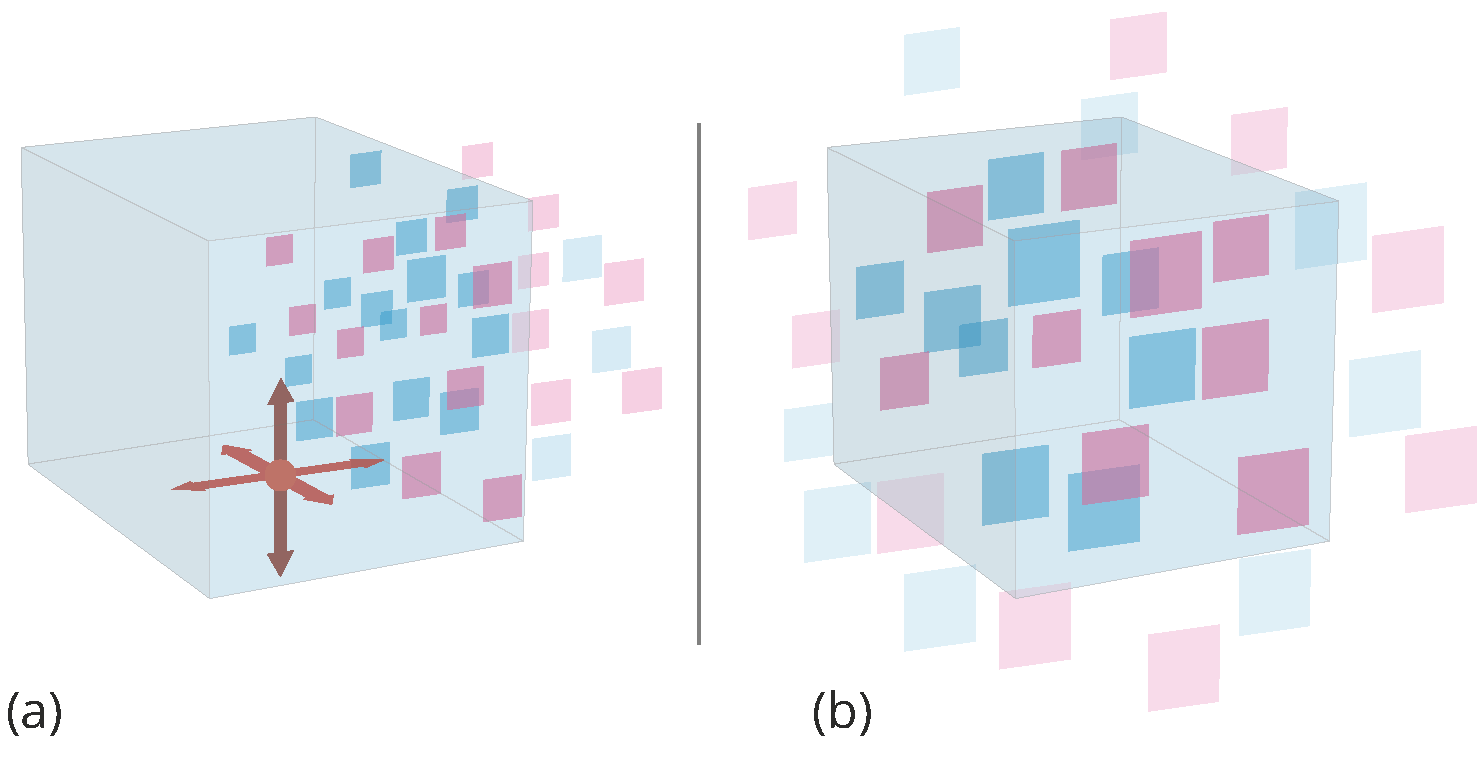

In an iterative process, we identified (a) four applicable phone-based solutions for panning (Move, Rotate, 2D-Drag with plane selection, 2D-Drag with orthogonal translation) and (b) three for zooming (Move, Rotate, Drag), which can be combined into 12 compound techniques. We selected a subset of four of these techniques (Move+Drag, Move+Rotate, Drag+Drag, DragRotate+Drag), depicted in the figure, and added the HoloLens AirTap gesture (shown on the left) as a baseline technique.
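The space of compound techniques is the Cartesian product of the panning and zooming options listed above (4 × 3 = 12). The following Python sketch enumerates it. The mapping of the study's short names (e.g., DragRotate+Drag) onto the listed options is our reading of the technique descriptions, not a definition taken from the paper.

```python
from itertools import product

# Panning and zooming options as listed in the text.
PAN_OPTIONS = [
    "Move",
    "Rotate",
    "2D-Drag with plane selection",
    "2D-Drag with orthogonal translation",
]
ZOOM_OPTIONS = ["Move", "Rotate", "Drag"]

# Each compound technique pairs one panning option with one zooming option.
COMPOUND_TECHNIQUES = list(product(PAN_OPTIONS, ZOOM_OPTIONS))

# Assumed mapping of the four studied techniques onto (pan, zoom) pairs;
# this is an interpretation of the descriptions, not taken from the paper.
SELECTED = {
    "Move+Drag": ("Move", "Drag"),
    "Move+Rotate": ("Move", "Rotate"),
    "Drag+Drag": ("2D-Drag with plane selection", "Drag"),
    "DragRotate+Drag": ("2D-Drag with orthogonal translation", "Drag"),
}

print(len(COMPOUND_TECHNIQUES))  # 12
# Every selected technique is one of the enumerated combinations.
assert all(pair in COMPOUND_TECHNIQUES for pair in SELECTED.values())
```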

- Move+Drag (M+D): This technique combines moving the device in free space, which serves as direct input for 3D translation of the data space, with indirect touch input for zooming: dragging up or down on the phone’s touchscreen zooms in or out, respectively.

- Move+Rotate (M+R): Like M+D, this technique maps free-space movement of the device to 3D translation of the data space. Zooming is done by rotating the smartphone around its forward-facing axis: rotating it to the left zooms out, rotating it to the right zooms in.

- Drag+Drag (D+D): This technique combines 2D dragging on the XZ-, YZ-, or XY-plane with plane selection based on the device orientation, which is tracked and discretized to one of the three planes. A double tap serves as a mode switch to enable zooming, again via an up/down drag (zoom in/out).

- DragRotate+Drag (DR+D): Like D+D, this technique uses 2D dragging, but only for translation in the horizontal XZ-plane; rotating the phone around its forward-facing axis simultaneously translates the data space up or down along the Y-axis. As in D+D, a double tap switches to zoom mode, in which an up/down drag zooms in/out.
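The plane selection step of D+D can be illustrated with a small sketch. It assumes a Y-up world frame and that the phone's screen normal is available in world coordinates. The orientation is discretized by picking the world axis the normal is most aligned with, and the drag is applied in the plane perpendicular to that axis, so that the drag plane roughly matches the screen plane. The axis assignments, function names, and the `gain` parameter are hypothetical and not taken from the paper.

```python
# Unit vectors of the world axes (assumption: Y-up frame, as in Unity/HoloLens).
AXES = {"X": (1.0, 0.0, 0.0), "Y": (0.0, 1.0, 0.0), "Z": (0.0, 0.0, 1.0)}

# Hypothetical lookup: index of the dominant screen-normal component ->
# (plane name, world axis for horizontal drag u, world axis for vertical drag v).
PLANE_FOR_NORMAL_AXIS = {
    0: ("YZ", "Z", "Y"),  # phone held sideways, normal roughly along X
    1: ("XZ", "X", "Z"),  # phone lying flat, normal roughly along Y
    2: ("XY", "X", "Y"),  # phone held upright, normal roughly along Z
}


def select_plane(screen_normal):
    """Discretize the device orientation to one of the three drag planes."""
    # Pick the world axis the screen normal is most aligned with.
    axis = max(range(3), key=lambda i: abs(screen_normal[i]))
    return PLANE_FOR_NORMAL_AXIS[axis]


def drag_to_translation(screen_normal, du, dv, gain=1.0):
    """Map a 2D touch drag (du, dv) to a 3D translation within the selected plane."""
    plane, u_name, v_name = select_plane(screen_normal)
    u, v = AXES[u_name], AXES[v_name]
    translation = tuple(gain * (du * u[i] + dv * v[i]) for i in range(3))
    return plane, translation


plane, offset = drag_to_translation((0.1, 0.95, 0.2), du=0.02, dv=-0.01)
print(plane)  # XZ
```

With the phone lying roughly flat, the normal is dominated by its Y component, so the drag moves the data space horizontally. Sign conventions (e.g., whether an upward drag maps to +Z or −Z) would need to match the actual rendering setup.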

Our selection was guided by the following design goals, while aiming to cover a wide range of the design dimensions listed after them.

Design Goals

- Unimanual Input: to allow for the use of additional tools, e.g., for selection or inspection of items

- Eyes-free Interaction: to keep the users’ visual focus on the AR visualization and minimize distraction

- Smartphone-only Implementation: using inertial sensors or the device camera to provide spatial awareness

- High Degree of Compatibility: the physical action should resemble the system’s response as closely as possible

- Robustness and Conciseness: to prevent actions from interfering with each other (accidentally or by their nature) or from being misapplied, e.g., due to fatigue

Design Dimensions

- Degree of spatiality (D1): How many DoF are controlled through spatial input?

- Degree of simultaneity (D2): How many DoF can be controlled in parallel/simultaneously?

- Degree of guidance (D3): How many DoF are controlled through gestures that provide some form of alignment to guide the user?

Study Design and Results

Our goal was to gain insights into different 3D pan & zoom techniques and their combinations for exploring a 3D data space. In particular, we wanted to assess the five selected techniques with respect to speed, efficiency, learning effects, and fatigue.

We designed a user study as a controlled lab experiment. The independent variables were interaction technique, task type, and target zoom level. The dependent variables were task completion time and efficiency, defined as the ratio of the length of the shortest translation path to the length of the actual path. We chose a within-subjects design in which each participant completed all tasks with all techniques. The order of the techniques was counterbalanced, and the order of the tasks for each technique was randomized.
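The efficiency measure can be computed from the logged target positions. The sketch below assumes that a trajectory is a list of 3D target positions sampled during a trial and that the shortest translation path is the straight line between the first and last logged position; it considers translation only and ignores the zoom component. The function names are hypothetical.

```python
import math


def path_length(points):
    """Total length of the polyline through the logged 3D positions."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def translation_efficiency(points):
    """Shortest (straight-line) translation path divided by the actual path.

    1.0 means the target was moved along a straight line; smaller values mean detours.
    """
    if len(points) < 2:
        raise ValueError("need at least two logged positions")
    actual = path_length(points)
    if actual == 0.0:
        return 1.0  # no translation at all; treated as efficient (assumption)
    shortest = math.dist(points[0], points[-1])
    return shortest / actual


# A detour: 3 units along X, then 4 along Y, instead of 5 units diagonally.
print(round(translation_efficiency([(0, 0, 0), (3, 0, 0), (3, 4, 0)]), 3))  # 0.714
```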

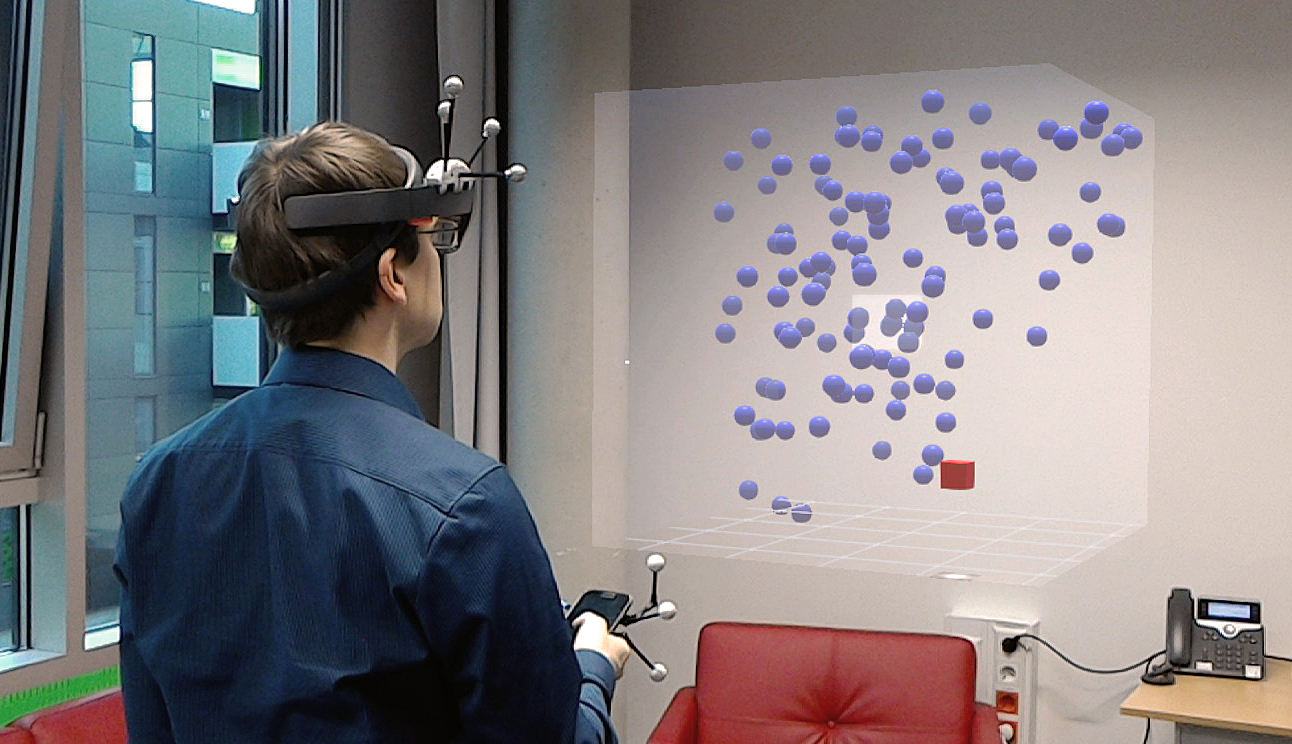

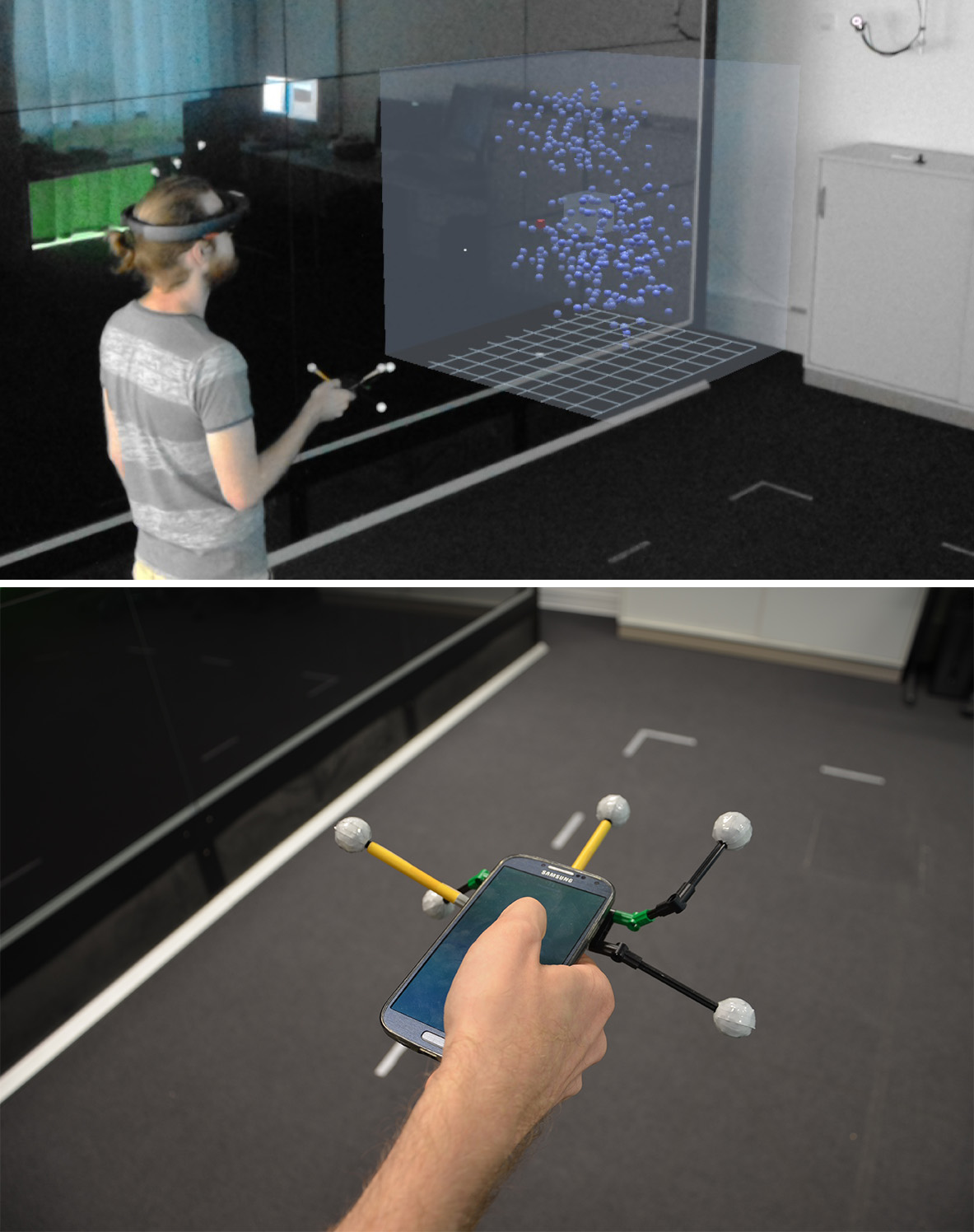

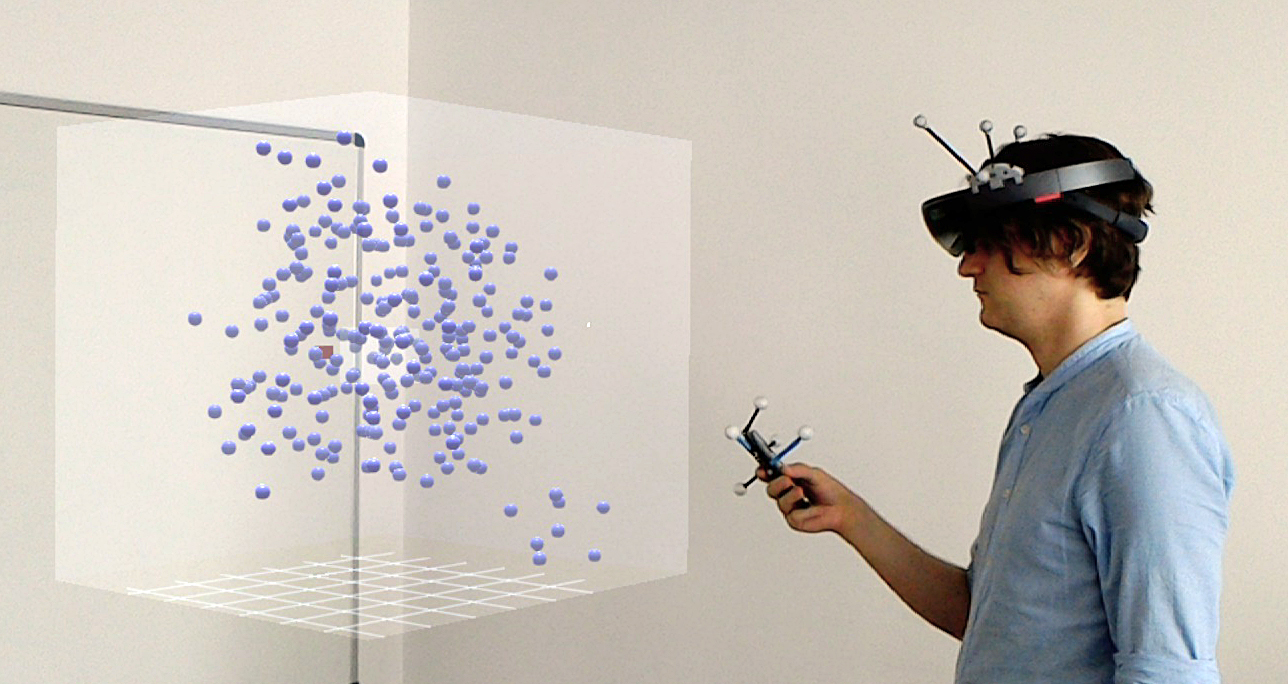

We recruited 28 participants from an entry-level HCI course at the local university, 25 of whom completed the study successfully; the results of three participants had to be excluded because of technical and personal issues. Of the remaining 25 participants, 15 were male and 10 were female. Their mean age was 21.8 years (SD = 3.5). All had normal or corrected-to-normal vision.

Our experimental setup included a Microsoft HoloLens and a smartphone. To bring both devices into a common coordinate system and allow for precise and reliable data logging and analysis, we attached IR markers to both devices and tracked them with an IR tracking system.
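Once the IR tracking system reports the poses of both devices in its own world frame, relating them amounts to composing rigid transforms. The following pure-Python sketch expresses the phone's pose in the HoloLens frame. It assumes 4×4 homogeneous matrices, ignores the calibration offset between each IR marker and its device, and uses hypothetical helper names and example poses.

```python
def mat_mul(a, b):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(t):
    """Inverse of a rigid transform [R | p], i.e. [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    q = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [q[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def relative_pose(world_from_a, world_from_b):
    """Pose of device b expressed in the frame of device a."""
    return mat_mul(rigid_inverse(world_from_a), world_from_b)


def translation(x, y, z):
    """Pure translation as a 4x4 homogeneous matrix."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


# Hypothetical tracked poses (meters, tracking-system frame).
world_from_hololens = translation(0.0, 1.6, 0.0)
world_from_phone = translation(0.2, 1.2, 0.3)
hololens_from_phone = relative_pose(world_from_hololens, world_from_phone)
print([round(hololens_from_phone[i][3], 3) for i in range(3)])  # [0.2, -0.4, 0.3]
```

Because the inverse of a rigid transform only needs a transpose and one matrix-vector product, no general matrix inversion is required; in practice, a linear algebra library would typically be used instead of nested lists.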

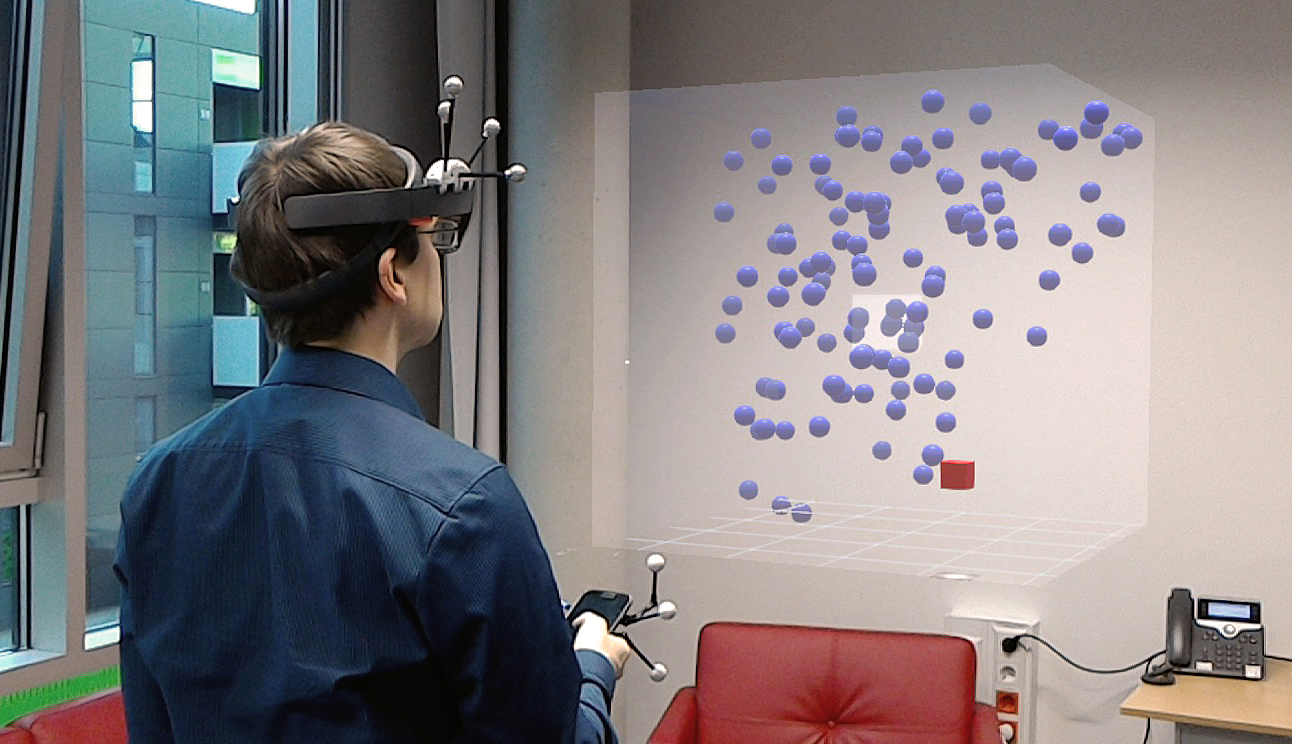

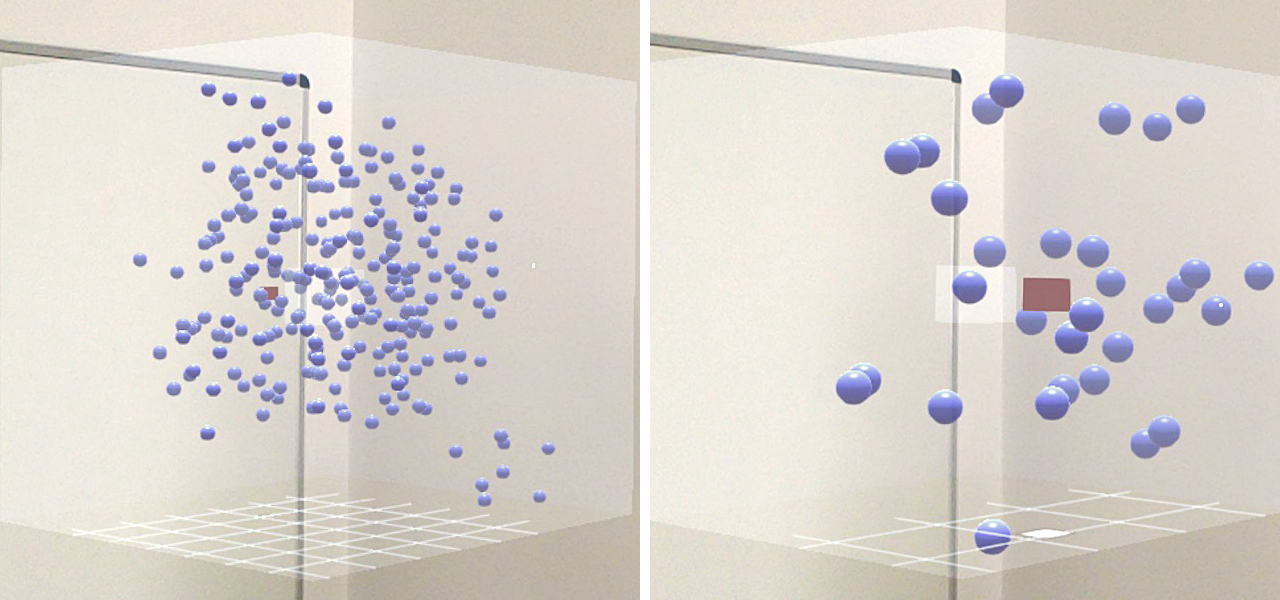

The AR scene presented to the user consisted of a virtual cube representing a zoomable information space. The cube acted as a clipping volume for a larger scene, effectively serving as a volumetric window into this data space. It was filled with randomly positioned clusters of spheres that served both as depth cues and as mock “data items”. A target volume was highlighted in the center of the cube. For each trial, a small red cube was placed at a predefined location in the scene. The goal of each task was to move this target object into the target volume in the center of the presentation space and to scale it to match the size of the target volume. To this end, participants had to pan & zoom the scene.
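Two aspects of this task can be made concrete in a sketch. First, if zooming scales the scene uniformly about a fixed center (assumed here to be the cube center), an off-center target also moves when zooming, so panning and zooming are coupled. Second, a trial can be judged complete once the target is close enough to the target volume and has a matching size. The function names and the tolerances `pos_tol` and `size_tol` are hypothetical and do not reflect the criteria used in the study.

```python
import math


def zoom_about(point, center, factor):
    """Scale a scene point uniformly about a fixed zoom center."""
    return tuple(c + factor * (p - c) for p, c in zip(point, center))


def task_completed(target_pos, target_size, goal_pos, goal_size,
                   pos_tol=0.01, size_tol=0.1):
    """Hypothetical completion check: position within pos_tol (scene units)
    and size within size_tol (relative) of the target volume."""
    position_ok = math.dist(target_pos, goal_pos) <= pos_tol
    size_ok = abs(target_size / goal_size - 1.0) <= size_tol
    return position_ok and size_ok


# Zooming in by 2x about the cube center also pushes an off-center target outward.
print(zoom_about((0.1, 0.0, 0.0), (0.0, 0.0, 0.0), 2.0))  # (0.2, 0.0, 0.0)
```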

We logged task completion times as well as the target position and scale over time. We also logged the position and orientation of the HoloLens and, if applicable, the phone.

Download study data: Link

Publications

@inproceedings{bueschel2019,
  author = {Wolfgang B\"{u}schel and Annett Mitschick and Thomas Meyer and Raimund Dachselt},
  title = {Investigating Smartphone-based Pan and Zoom in 3D Data Spaces in Augmented Reality},
  booktitle = {Proceedings of the 21st International Conference on Human-Computer Interaction with Mobile Devices and Services},
  series = {MobileHCI ’19},
  year = {2019},
  month = {10},
  isbn = {9781450368254},
  location = {Taipei, Taiwan},
  pages = {1--13},
  numpages = {13},
  doi = {10.1145/3338286.3340113},
  url = {https://doi.org/10.1145/3338286.3340113},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {Augmented Reality, 3D Navigation, 3D Data Exploration, Pan \& Zoom, Interaction Techniques, Immersive Visualization}
}

Related Student Theses

Smartphone-Unterstützung für die Interaktion mit Ergebnisvisualisierungen in Augmented Reality (Smartphone Support for Interaction with Result Visualizations in Augmented Reality)

Thomas Meyer, June 15, 2018 to November 16, 2018

Supervision: Wolfgang Büschel, Annett Mitschick, Raimund Dachselt