With Augmented Dynamic Data Physicalization, we present concepts and implementations for combining dynamic Data Physicalizations with Augmented Reality to create interactive data visualizations.

Abstract

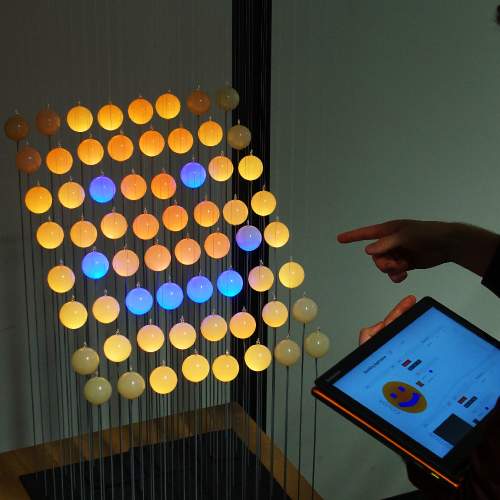

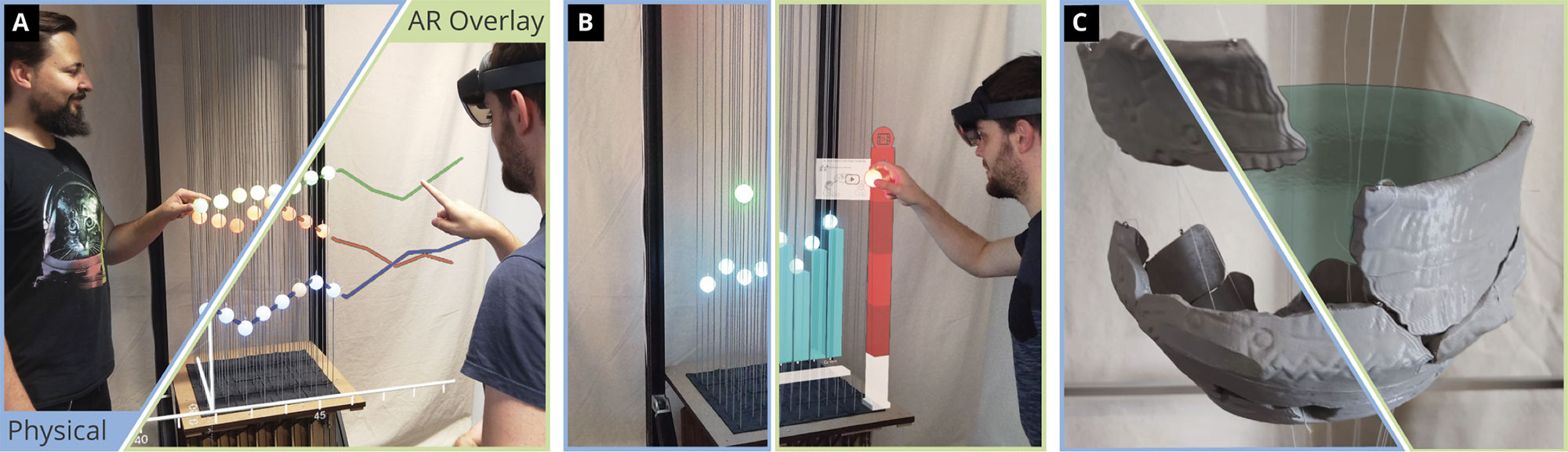

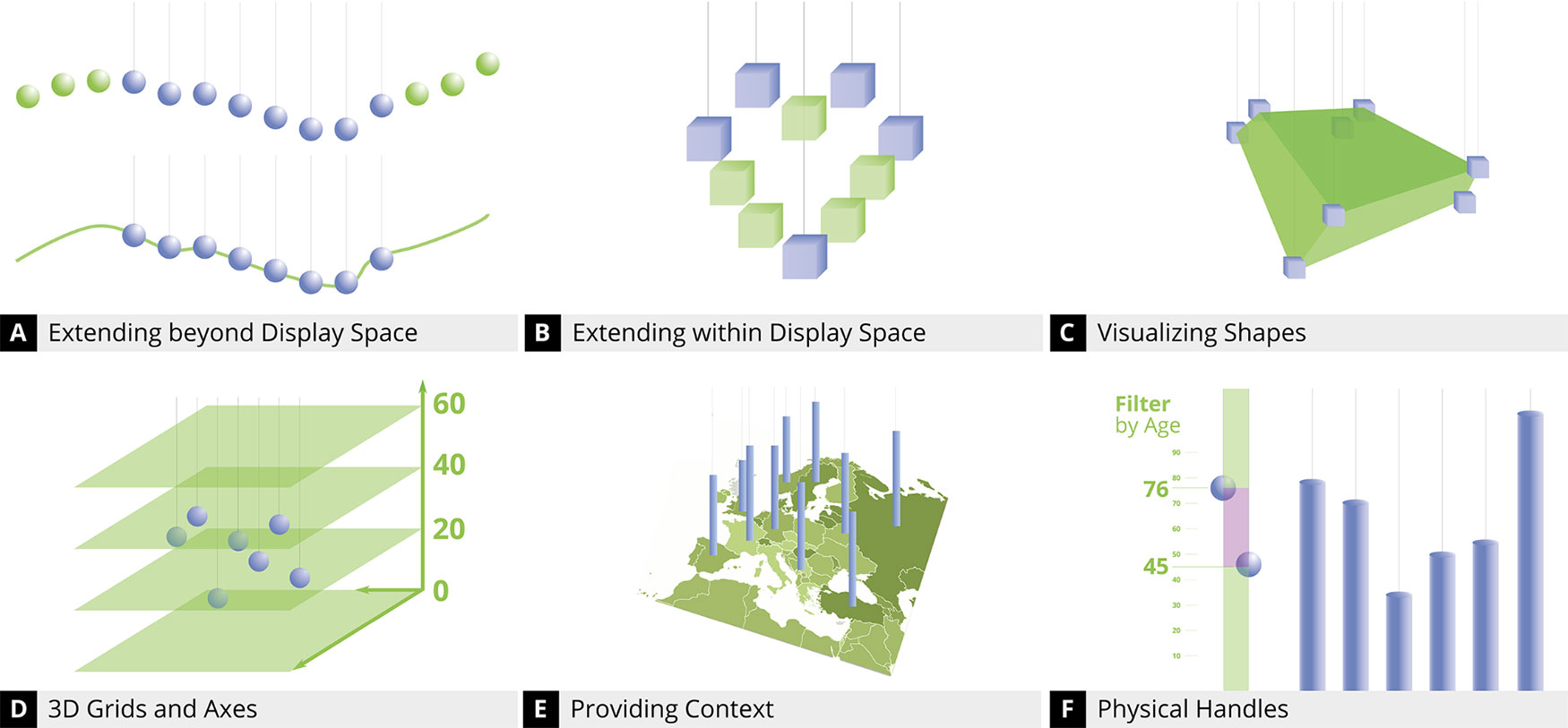

We investigate the concept of Augmented Dynamic Data Physicalization, the combination of shape-changing physical data representations with high-resolution virtual content. Tangible data sculptures, for example using mid-air shape-changing interfaces, are aesthetically appealing and persistent, but also technically and spatially limited. Blending them with Augmented Reality overlays such as scales, labels, or other contextual information opens up new possibilities. We explore the potential of this promising combination and propose a set of essential visualization components and interaction principles. They facilitate sophisticated hybrid data visualizations, for example Overview & Detail techniques or 3D view aggregations. We discuss three implemented applications that demonstrate how our approach can be used for personal information hubs, interactive exhibitions, and immersive data analytics. Based on these use cases, we conducted hands-on sessions with external experts, resulting in valuable feedback and insights. They highlight the potential of combining dynamic physicalizations with dynamic AR overlays to create rich and engaging data experiences.

STRAIDE

This project is a follow-up to STRAIDE. Please also have a look at the detailed project website.

Publication

@article{Engert:2025:ADP,

author = {Severin Engert and Andreas Peetz and Konstantin Klamka and Pierre Surer and Tobias Isenberg and Raimund Dachselt},

title = {Augmented Dynamic Data Physicalization: Blending Shape-changing Data Sculptures with Virtual Content for Interactive Visualization},

journal = {IEEE Transactions on Visualization and Computer Graphics},

volume = {31},

issue = {10},

year = {2025},

month = {3},

location = {Vienna, Austria},

pages = {7580--7597},

doi = {10.1109/TVCG.2025.3547432},

publisher = {IEEE}

}

List of additional material

Media

Presentation

Coming soon …

Figures

All figures from the article can be found in the corresponding OSF repository.

Related Publications

@inproceedings{Engert2022,

author = {Severin Engert and Konstantin Klamka and Andreas Peetz and Raimund Dachselt},

title = {STRAIDE: A Research Platform for Shape-Changing Spatial Displays based on Actuated Strings},

booktitle = {Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems},

series = {CHI '22},

year = {2022},

month = {4},

isbn = {978-1-4503-9157-3},

location = {New Orleans, LA, USA},

numpages = {16},

doi = {10.1145/3491102.3517462},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {Prototyping Platform, Spatial Display, Shape-Changing Interface, Tangible Interaction, Casual Visualization, Data Physicalization}

}

Acknowledgments

We thank the anonymous reviewers for their valuable comments and suggestions that helped us to improve this article. We also thank our external experts for taking part in our study and providing their feedback. This work was funded in part by

- the DFG as part of Germany’s Excellence Strategy EXC 2050/1 – Project ID 390696704 – Cluster of Excellence “Centre for Tactile Internet with Human-in-the-Loop” (CeTI),

- the DFG as part of Germany’s Excellence Strategy EXC-2068 – Project ID 390729961 – Cluster of Excellence “Physics of Life” (PoL) of Technische Universität Dresden,

- DFG grant 389792660 as part of TRR 248 – CPEC (see cpec.science),

- the Federal Ministry of Education and Research of Germany (BMBF) in the program of “Souverän. Digital. Vernetzt.”, joint project 6G-life, project ID number: 16KISK001K,

- the Federal Ministry of Education and Research of Germany (BMBF) (SCADS22B) and the Saxon State Ministry for Science, Culture and Tourism by funding the competence center for Big Data and AI “ScaDS.AI Dresden/Leipzig.”